Neuroscience reasons for what AI cannot do (yet)

When we put people into brain scanners, we see two distinct brain patterns, which I’ll call “near mode” and “far mode”.[1]

The first brain pattern, “near mode”, is what happens when you focus on concrete tasks with clear solutions, like answering “What is 12 plus 34?” or “What’s the fastest way to get to the grocery store?”

The second brain pattern, “far mode”[2], occurs when you think about big-picture, long-term issues that don’t have correct answers (but do have wrong answers), like “What are some reasons for the decline of the Roman Empire?” or “Think of as many uses as you can for a brick”.

It’ll be easy at first to think of using a brick for building, bludgeoning, or balancing, but soon you’ll have to really stretch your brain to come up with uses like “putting it into a toilet cistern to save water”, “using the holes to measure spaghetti”, or “as an exfoliant for feet”.

Current AIs are good at near mode, but they fundamentally cannot do far mode well (for now), because of how they are trained.

To train an AI, you have to reward it for correct answers and penalize it for wrong ones, so the algorithm learns to do things that get more correct answers, much like rewarding a dog when you teach it to sit.
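As a rough illustration of what "reward for correct answers and error for wrong answers" looks like, here is a minimal sketch in Python. It is a toy, not how any production model is trained: a one-number model learns to double its input from labelled examples. The names `train`, `examples`, and `learning_rate` are made up for this illustration.

```python
def train(examples, learning_rate=0.01, epochs=200):
    """Fit guess = w * x by nudging w to shrink the error on each example."""
    w = 0.0
    for _ in range(epochs):
        for x, correct in examples:
            guess = w * x
            error = guess - correct          # how far off the guess was
            w -= learning_rate * error * x   # adjust w so the error shrinks
    return w

# Every example comes with its correct answer attached.
examples = [(1, 2), (2, 4), (3, 6), (4, 8)]
w = train(examples)
print(round(w, 3))
```

Notice that `correct` has to exist for every example. Without a known answer there is nothing to compute `error` against, which is the limitation the rest of this section is about.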

This results in AIs that are very good at near mode jobs: repetitive tasks, following set procedures, and making decisions based on concrete information with clear answers.

But far mode jobs require you to go beyond the obvious and come up with unexpected, creative ways to solve problems. This is something AIs cannot do right now, because we don’t know how to train them without giving them examples of correct answers.[3]

Near mode is for AI, and professionals

If you have a near mode job, you’re at risk of being replaced by AI[4], but this doesn’t mean that near mode skills no longer matter.

Google replaced memorization. These days it seems pointless for school to make you memorize specific facts, dates, or other trivia that could easily be looked up.

But would you rather be flown by a pilot who’s memorized every knob in their plane, or would you rather be flown by a random person using Google to look up what each button does?

Modern airplanes are so automated that they are almost impossible to stall, and the pilot’s job is focused mostly on navigation, communication, and emergencies, leaving the job of balancing fuel to the computers. But a good pilot still knows how to manually balance the fuel if needed.

Most jobs contain a mix of both near and far mode.

If you’re a software engineer, the AI is very good at taking your near mode job of coding boilerplate features that most websites need: a login page, notification management, and a user settings page.

But your far mode job is to think about the broader implications of the code. You can’t just ask the AI to add a “remember me” feature to your login page, because you have to consider the security ramifications: when not to remember the user, under what circumstances you would log them out, and which assumptions you’ve made elsewhere in your app about who is logged in may now require additional authentication.

Debugging skills are more valuable than coding skills. Anyone can write code, but the more difficult job in software engineering is understanding which hypotheses are worth testing. AIs can help you identify bugs, but they don’t understand assumptions you’ve made that aren’t encoded into the logic.

Far mode is your unique advantage

If you can find ways of offloading the near mode parts of your job to AI, so you can focus more on far mode, you’ll be more productive and spend more time doing what you’re uniquely good at.

To do this effectively, we have to understand how creativity works.

If you explicitly ask the AI to do something creative, the AI will do something that looks like far mode, but only if you prompt it to do so via step-by-step instructions first, which is near mode! Similarly, the temperature setting doesn’t change how creative the AI behaves; it merely controls the randomness of its responses.
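To see why temperature is about randomness rather than creativity, here is a minimal sketch of how it is commonly applied: the model's raw scores for each candidate word are divided by the temperature before being turned into probabilities. The words and scores below are invented for illustration, and `softmax_with_temperature` is my own helper name, not any library's API.

```python
import math
import random

def softmax_with_temperature(scores, temperature):
    """Turn raw scores into probabilities; higher temperature flattens them."""
    scaled = [s / temperature for s in scores]
    biggest = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - biggest) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores for the next word after "You can use a brick for ..."
words = ["building", "bludgeoning", "exfoliating"]
scores = [4.0, 2.0, 0.5]

cold = softmax_with_temperature(scores, 0.5)
hot = softmax_with_temperature(scores, 2.0)

for name, probs in [("temperature 0.5", cold), ("temperature 2.0", hot)]:
    print(name, [round(p, 3) for p in probs])

# Sampling draws from the same fixed list of candidates either way.
rng = random.Random(0)
print(rng.choices(words, weights=hot, k=5))
```

A higher temperature makes the unlikely words come up more often, but the ranking of the candidates stays the same and no new candidates appear. The dice get looser; the ideas don't get better.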

Offloading near mode to the AI

In the rest of this essay, I’ll share some techniques I use to offload the near mode parts of my job to AI, so I can focus on far mode.

Writing

Unless you are a freak-of-nature-genius-rapper like Eminem, you’ll have a hard time coming up with rhymes or synonyms for words; I spend a lot of time looking for fun, unexpected turns of phrase to make my writing more memorable.

But AIs are very good at finding synonyms, connected ideas, and related words (including rhymes!) because of embeddings.

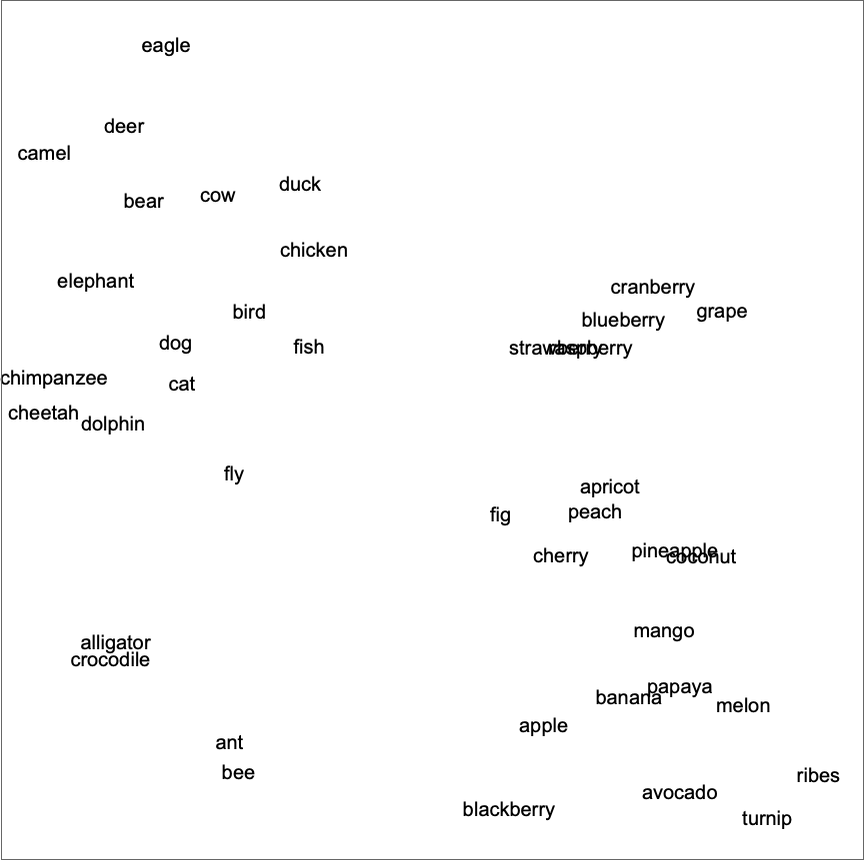

AI embeds words or images as a set of numbers (vectors) that capture semantic relationships. So words with similar meanings live closer together, and words with different meanings live farther away.

Notice how animals like bear and cow cluster together, away from the cluster of fruits like banana and melon.[5]

In actuality these embeddings have hundreds or even thousands of dimensions, not just two, so the AI can search across dimensions that you’ve never even considered.
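Here is a small sketch of what "closer together" means in practice. The vectors are made up by hand and have only three dimensions; real embeddings are learned by a model and are much larger. Cosine similarity is one common way to measure closeness, and `nearest` is a hypothetical helper for this example.

```python
import math

# Made-up 3-dimensional vectors for illustration only.
embeddings = {
    "bear":   [0.9, 0.8, 0.1],
    "cow":    [0.8, 0.9, 0.2],
    "banana": [0.1, 0.2, 0.9],
    "melon":  [0.2, 0.1, 0.8],
}

def cosine_similarity(a, b):
    """Close to 1.0 when two vectors point the same way, lower otherwise."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def nearest(word):
    """Return the other word whose vector is most similar to this one."""
    others = [w for w in embeddings if w != word]
    return max(others, key=lambda w: cosine_similarity(embeddings[word], embeddings[w]))

print(nearest("bear"))
print(nearest("banana"))
```

With these toy numbers the animals land next to each other and so do the fruits, which is the same clustering you see in the picture.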

But AI struggles to write with surprise, vivid metaphors, or humour, which makes it BORING. That’s because the best “poetic” descriptions connect ideas that are not obviously related in terms of embedding distance, and finding them is a far mode task.

Paul Graham describes the joy of working on a personal project as being like skating:

Working on a project of your own is as different from ordinary work as skating is from walking. It’s more fun, but also much more productive.

The word skating is not related to the word working in any way. AI is never going to learn an association between skating and working, as they are entirely dissimilar concepts. But the comparison works so well in this context because it captures the feeling of getting to a destination faster than walking while having more fun at the same time, all while being incredibly succinct!

AIs are trained on the general Internet, which is an aggregation of everyone’s opinions, so the AI ends up with an average, mainstream perspective. That’s why AI writing sounds so boring.

However, because your perspective is naturally unique, AI can help you connect it to the mainstream view, broadening your communication for a wider audience and making your examples resonate with people whose life experiences are very different from yours.

Earlier in this article, I gave this example:

If you’re a software engineer, the AI is very good at taking your near mode job of coding boilerplate features that most websites need: a login page, notification management, and a user settings page.

This makes sense to software engineers, but not to people with other jobs, so I had the AI help me convert that example to a range of different careers so you can pick the one that resonates with you.

Summarizing

I don’t usually use AI for summarization because it only lists facts (which is okay, if I just want to quickly skim to decide if the content is relevant[6]) and doesn’t really understand what information is valuable.

Made to Stick has an example where students were told:

Ken Peters, principal of Beverly Hills High School, announced that the entire faculty will travel to Sacramento next Thursday for a colloquium on new teaching methods. Among the speakers will be anthropologist Margaret Mead, …etc.

If you ask AI to summarize this memo, it’ll just condense it into bullet points.

But the AI completely missed the most valuable point for the students—which is, “There will be no school on Thursday!”

The AI doesn’t understand that if all the teachers are travelling, that means the school will be closed. All it can do is compress the content and regurgitate facts. It’s up to you to figure out what the point is.

Syntopical reading

You can read about 30 pages per hour, but if you want to read syntopically[7] across multiple sources, you’ll need weeks or even months to digest dozens of books to grasp multiple perspectives on a topic.

AI is very broad but it is not deep. AI is trained across the Internet, books, and encyclopedias, so it can explore adjacent topics and concepts at a high level. Information that would usually take days or weeks to get, you can access in just a snap. This makes the initial stages of research easier, as it’s very good at proposing high-level lines of discovery.

But encoding all this data means that the AI doesn’t understand deep facts about specific topics unless they’re already widely known. For example, if you ask AI about a more obscure book, it’ll just make things up based on the title and its general thesis, without connecting to the book’s content or the evidence that it presents.

However, AI models that are fine-tuned to specific subject areas can go deeper, and techniques like RAG (retrieval-augmented generation) can give you sources that you can follow up on with deeper investigation yourself.
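To make the RAG idea a little more concrete, here is a minimal sketch of the retrieval half. Real systems typically search by embedding similarity and then send the assembled prompt to a model; this toy version only counts shared words and stops before any model is called. The file names, passages, and function names are all hypothetical.

```python
import re
from collections import Counter

# Hypothetical file names and passages, standing in for your own notes.
passages = {
    "notes/bricks.txt": "A brick in the toilet cistern saves water.",
    "notes/pilots.txt": "A good pilot still knows how to balance fuel manually.",
    "notes/reading.txt": "Syntopical reading compares many books on one topic.",
}

def tokenize(text):
    """Lowercase the text and split it into plain words."""
    return re.findall(r"[a-z]+", text.lower())

def overlap(question, passage):
    """Count the words the question and the passage share."""
    shared = Counter(tokenize(question)) & Counter(tokenize(passage))
    return sum(shared.values())

def retrieve(question, k=1):
    """Return the k (source, passage) pairs that best match the question."""
    ranked = sorted(
        passages.items(),
        key=lambda item: overlap(question, item[1]),
        reverse=True,
    )
    return ranked[:k]

def build_prompt(question):
    """Assemble a prompt that asks for an answer drawn from labelled sources."""
    context = "\n".join(f"[{source}] {text}" for source, text in retrieve(question))
    return (
        "Answer using only these sources and cite them:\n"
        f"{context}\n\nQuestion: {question}"
    )

print(build_prompt("What does a brick in the cistern do?"))
```

Because each passage carries its source label into the prompt, the answer can point back to where a claim came from, and that is what lets you go and read the original yourself.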

Perhaps in the future, larger AI models will be able to store deeper insights, but for now you’ll need to discover these insights yourself, while AI can help you fill in your knowledge gaps faster.

The AI is only as smart as you pretend to be

If I ask ChatGPT “I have a cold, what should I do?”, it will give me basic tips on how to deal with a cold, like resting or staying hydrated.

But if I say “A patient is presenting with symptoms of acute viral nasopharyngitis…” (the medical name for the common cold), it will give me a longer, more detailed response—even suggesting specific medications to take.

If you talk like a doctor, the AI will reply like a doctor.

But the AI companies implemented safeguards to avoid giving legal or medical advice to the general public, so if you talk like an average civilian, the AI will censor itself more and give you less helpful information, as your words do not cluster together with the professional jargon that experts use.

The AI models score highly on standardized tests like the LSAT (for lawyers) or USMLE (for doctors), so they obviously have the knowledge, but to unlock that knowledge, you have to pretend that you’re on the same level as the AI by using the same professional jargon, so it assumes that you’re part of that same world.

If you want to be smarter, you can start by pretending to behave as a smart person would, even if you might not have the self-confidence yet. Maybe you’ll try reading more books, or tell yourself “I’m the kind of person who likes to learn something new every day.”

Although you’re just faking it, performing “being smart” will eventually become a part of who you are[8], and then you’ll actually get smarter as a result, with the AI helping you along the way!


Footnotes

1. I talk about this more in my undergraduate research thesis, How To Get More Creative.

2. In the literature, this is called the Default Mode Network.

3. Another example is choosing a university major. Near mode would be just looking at specific course requirements, job placement rates, and graduating salaries, which is very practical. But in far mode, you’ll reflect on many variables unique to you. Is this something you can stick to long-term? While there’s no right answer, there are wrong answers: you don’t want to pick a career that neither makes you happy nor pays well.

4. For example, we know that AI improves the performance of lower-skilled workers more than higher-skilled workers, implying that lower-skilled jobs with well-defined outcomes are more replaceable with automation.

5. Image credit: What Is ChatGPT Doing … and Why Does It Work?

6. In a previous essay I talk about why skimming is not always good, especially when you skip the process of discovering insights for yourself.

7. From How to Read a Book.

8. Via identity-based habit formation, one technique from Atomic Habits.