Machine Learning projects at the University of Texas at Austin

In my Master’s of Computer Science at the University of Texas at Austin, I completed coursework focused on deep learning, computer vision, reinforcement learning, parallelization, linear algebra, and optimization. Below is a selection of projects from my coursework.

Computer Vision Projects

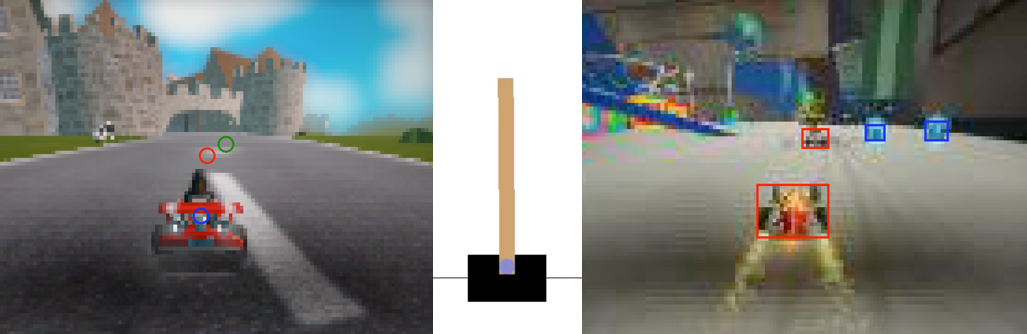

In our Deep Learning class, we built deep neural networks in pytorch to develop vision systems for the racing simulator SuperTuxKart, which is an open-source clone of Mario Kart. Below are two selected projects from the course.

Self-Driving Go-Kart Racer

I built an agent which uses computer vision to drive a go-kart autonomously in the SuperTuxKart game using only the screen image as input; it has no other knowledge about the game.

The model is built in pytorch and is a fully convolutional network using an encoder-decoder structure with batch normalization to process the image and predict an “aim point”, which is an xy-coordinate of where the kart should drive. I coded a controller to handle the steering, acceleration, braking, and drifting of the kart based on this predicted aim point.

Autonomous Hockey Player

In a team with three other classmates, we built two autonomous hockey-playing agents in the SuperTuxKart game to score goals against opposing teams from our class. The instructors ran a tournament between every team’s agents and we finished in 3rd place among 16 teams.

Our general strategy was to have one agent focus on scoring the puck into the goal, and leave the other agent to focus on tracking the puck location as accurately as possible and communicating this information to the first agent. This strategy is built around the idea that one agent with perfect information would perform better than two agents with imperfect information.

Each agent processes its camera input using a fully convolutional network that we designed similar to the U-Net architecture with residual connections from ResNet. This allows our agents to detect the puck location and drive behind it to dribble the puck into the opponent’s goal.

Reinforcement Learning Projects

In our Reinforcement Learning class, we read the textbook Reinforcement Learning by Sutton and Barto and implemented several RL algorithms to solve problems from the book. Below are two selected projects from the course.

I also wrote an in-depth review of what I learned from the textbook, and posted my detailed notes on my bookshelf.

Cart Pole

I coded an agent to solve the CartPole problem from OpenAI Gym. In CartPole, a pole is balanced atop a cart and the agent moves the cart to prevent the pole from falling. The agent gets a reward of +1 for every timestep that it keeps the pole upright.

My agent uses the REINFORCE policy-gradient learning algorithm, with a baseline function. To estimate the policy function, I trained a neural network in pytorch with linear layers and ReLUs to predict the best action based on the current state.

Mountain Car

I coded an agent to solve the MountainCar problem from OpenAI Gym. In MountainCar, the agent tries to drive a car up the mountain to the right starting from the valley below. However, the car’s engine is not strong enough to drive directly up the mountain, so it must drive back and forth up to build up momentum.

I solved the problem 3 times using different techniques:

In the first solution, I implemented an n-step semi-gradient TD(0) algorithm to determine the action at each state. To estimate the value function, I implemented tile coding with linear function approximation.

In the second solution, I implemented n-step semi-gradient TD(0) again, except this time I performed policy evaluation by training a neural network in pytorch with linear layers and ReLUs to estimate the value function.

In the third solution, I implemented the true online Sarsa\((\lambda)\) algorithm to generate a state-action function directly, and used tile coding to generate the feature vector.